By Dan Middleton, Intel Senior Principal Engineer and Chair, CCC Technical Advisory Council

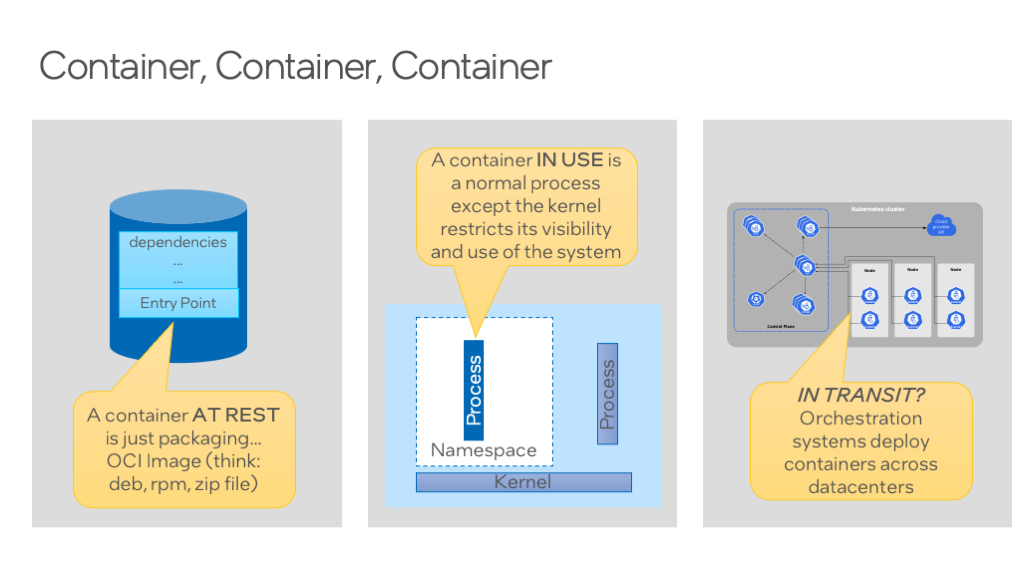

The term container can be ambiguous. Here are 3 different representations of what people might mean by a container.

I’m often asked about Confidential Computing and containers. Usually the question is something to the effect of, “Does Confidential Computing work with containers?” or “Can I use Confidential Computing without redesigning my containers?” (Spoilers: Yes and yes. But it also depends on your security and operational goals.)

The next question tends to be, “How much work will it be for me to get my containerized applications protected by Confidential Computing?” But there are a lot of variations to these questions, and it’s often not quite clear what the end goal is. Part of the confusion comes from “container” being a sort of colloquialism; it can mean a few different things depending on the context.

In Confidential Computing, we talk about the protection of data in use, in contrast with the protection of data at rest or in transit. So, if we apply the same metaphor to containers, we can see three different embodiments of what a container might mean.

In the first case, a container is simply a form of packaging, much like a Debian package or an RPM. You could think of it as a glorified zip file. It contains your application and its dependencies. This is really the only standardized definition of a container, and it comes from the OCI image spec. Few packaging considerations are relevant to Confidential Computing, so this part is pretty much a no-op.

The next thing people might mean when they talk about a container is the containerized application at runtime. The container image file includes an entry point, which is the process that’s going to be launched. Now, that process is also pretty boring. It’s just a normal Linux process. There’s no special layer intermediating instructions like a JVM or anything like that. The thing that makes it different is that the operating system blinds the process from the rest of the system (namespacing) and can restrict its resources (cgroups). This is also referred to as sandboxing. So again, from a Confidential Computing perspective, there’s nothing we would do for a container process that differs from what we would do for any other process.
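To make the "just a normal Linux process" point concrete, here is a minimal sketch that lists the namespaces the current process belongs to. It assumes a Linux system that exposes `/proc/self/ns`; the helper names `parse_ns_link` and `current_namespaces` are made up for this illustration. Run it once on the host and once inside a container: the output has the same shape, and typically only the namespace identifiers differ.

```python
from __future__ import annotations

import os
import re

NS_DIR = "/proc/self/ns"  # Linux-only; this directory is absent on other systems


def parse_ns_link(target: str) -> tuple[str, int]:
    """Split a namespace link target such as 'pid:[4026531836]' into (type, id)."""
    match = re.fullmatch(r"(\w+):\[(\d+)\]", target)
    if match is None:
        raise ValueError(f"unexpected namespace link: {target!r}")
    return match.group(1), int(match.group(2))


def current_namespaces(ns_dir: str = NS_DIR) -> dict[str, int]:
    """Map each entry under ns_dir to its namespace id; empty if unavailable."""
    namespaces: dict[str, int] = {}
    try:
        entries = sorted(os.listdir(ns_dir))
    except OSError:
        return namespaces  # not Linux, or /proc is not mounted
    for entry in entries:
        try:
            _, ns_id = parse_ns_link(os.readlink(os.path.join(ns_dir, entry)))
        except (OSError, ValueError):
            continue  # skip entries we cannot read or parse
        namespaces[entry] = ns_id
    return namespaces


if __name__ == "__main__":
    for name, ns_id in current_namespaces().items():
        print(f"{name}: {ns_id}")
```

Two processes that report the same identifier for a given entry share that namespace. A containerized process usually reports different identifiers from the host for entries such as `pid`, `mnt`, and `net`, which is all that "sandboxing" amounts to at this level.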

However, because the container image format and sandboxing have become so popular, an ecosystem has grown up around them to provide orchestration. Orchestration is another term that’s used colloquially. When you want to launch a whole bunch of web applications spread across maybe a few different geographies, you don’t want to do that same task 1000 times manually. You want it to be automated. And so, I think 90% of the time, maybe 99% of the time, when people ask questions about containers and Confidential Computing, they’re wondering whether Confidential Computing is compatible with their orchestration system.

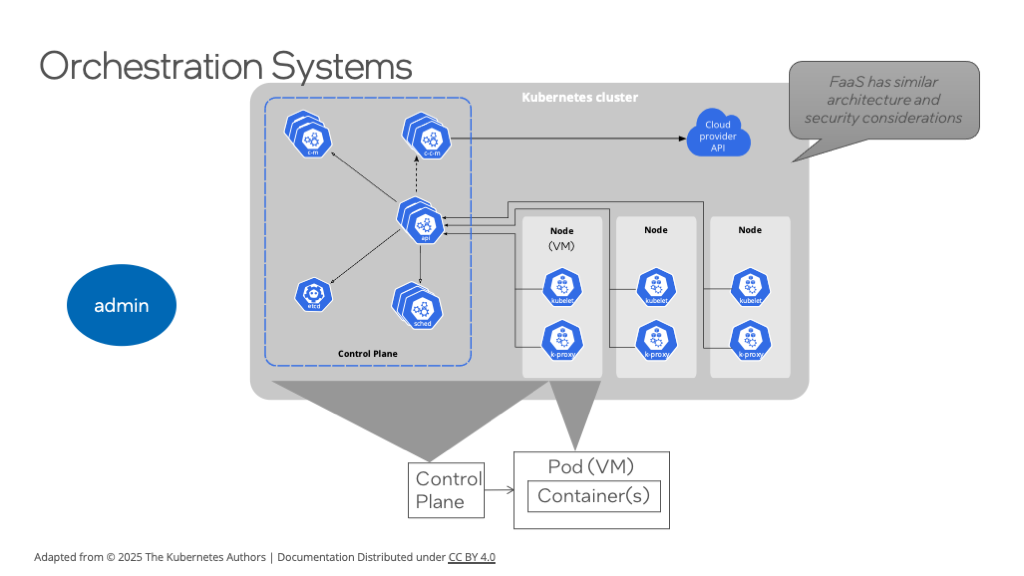

Administrative users operate a control plane which starts and stops containers inside nodes (which are often virtual machines). A Pod is a Kubernetes abstraction which has no operating system meaning – it is one or more containers each of which is a process.

One of the most popular orchestration systems is Kubernetes (K8s for short). Now, there are many distributions of K8s under different names, and there are many orchestration systems that have nothing to do with K8s. But given its popularity, let’s use K8s as an example to understand security considerations.

For our purposes, we’ll consider two K8s abstractions: the Control Plane and Nodes. The Control Plane is a collection of services that are used to send commands out to start, monitor, and stop containers across a fleet of nodes. Conventionally, a node is a virtual machine, and your containerized applications can be referred to as pods. From an operating system perspective, a pod is not a distinct abstraction. It’s sufficient for us to just think of a pod as one or more containers or, equivalently, one or more Linux processes. So, we have this control plane, which is a handful of services that help manage the containers launched across a fleet of virtual machines.

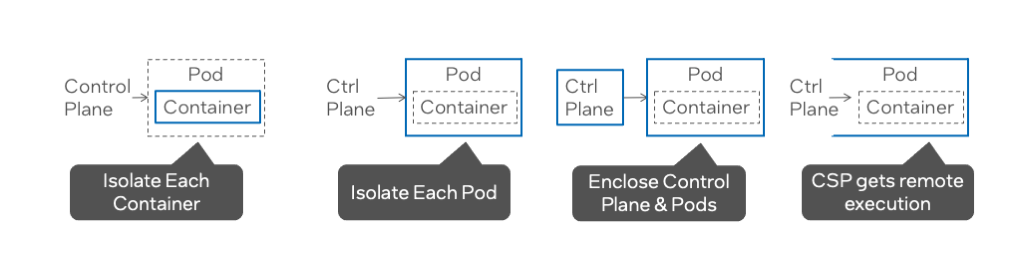

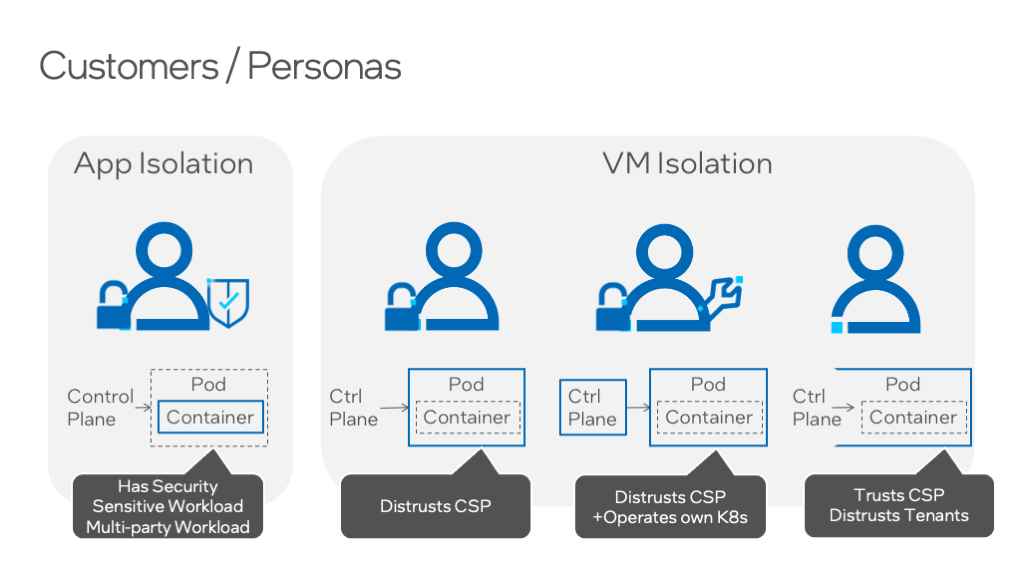

Now we can finally get into the Confidential Computing-related security considerations. If we were talking about adversary capabilities, the Control Plane has remote code execution, which is about as dangerous as an attacker can be. But is the Control Plane an adversary? What is it that we really want to isolate here, and what is it that we trust? There are any number of possible permutations, but they really collapse down to about four different patterns.

Four isolation patterns that recognize different trust relationships with the control plane.

In the first pattern, we want to isolate our container, and we trust nothing else. In the second, we may have multiple containers on the same node that need to work together, so we could think of the isolation unit as a pod, though more pragmatically it is a virtual machine. In both of these cases, but especially the second, the control plane still has influence over the container and its environment, no matter how it’s isolated. To be clear, the control plane can’t directly snoop on the container in either case, but you may want to limit the amount of configuration you delegate to the control plane.

And so, in the third case, we put the whole control plane, which means each of the control plane services, inside a Confidential Computing environment. Maybe more importantly, we operate the control plane ourselves, removing the third-party administrator entirely. It’s commonly the case, though, that companies don’t want to operate all of the K8s infrastructure by themselves, and that’s why there are managed K8s offerings from cloud service providers (CSPs). And that brings us to our last case, where we decide that we trust the CSP, and we’re just going to sort of ignore the fact that the control plane has remote code execution inside what is otherwise our isolated VM for our pods or containers.
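The four patterns can be summarized as a small lookup table. This is only a sketch of my reading of the descriptions above: the class, field names, and labels are invented for illustration and are not terminology from any project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IsolationPattern:
    name: str                      # illustrative label, not an official term
    isolation_unit: str            # what sits inside the protected boundary
    control_plane_operator: str    # who administers the control plane
    control_plane_protected: bool  # control plane services run in a confidential environment
    control_plane_trusted: bool    # is the control plane inside our trust boundary?


PATTERNS = (
    IsolationPattern("container", "process", "third party", False, False),
    IsolationPattern("pod", "virtual machine", "third party", False, False),
    IsolationPattern("self-operated control plane", "virtual machine", "you", True, True),
    IsolationPattern("managed control plane", "virtual machine", "CSP", False, True),
)


def patterns_where(control_plane_trusted: bool) -> list[str]:
    """Return the names of patterns matching a given trust stance."""
    return [p.name for p in PATTERNS if p.control_plane_trusted == control_plane_trusted]


if __name__ == "__main__":
    print("Control plane untrusted:", patterns_where(False))
    print("Control plane trusted:  ", patterns_where(True))
```

Laid out this way, the split is easier to see: the first two patterns keep the control plane outside the trust boundary, while the last two bring it inside, either by operating and protecting it yourself or by deciding to trust the CSP.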

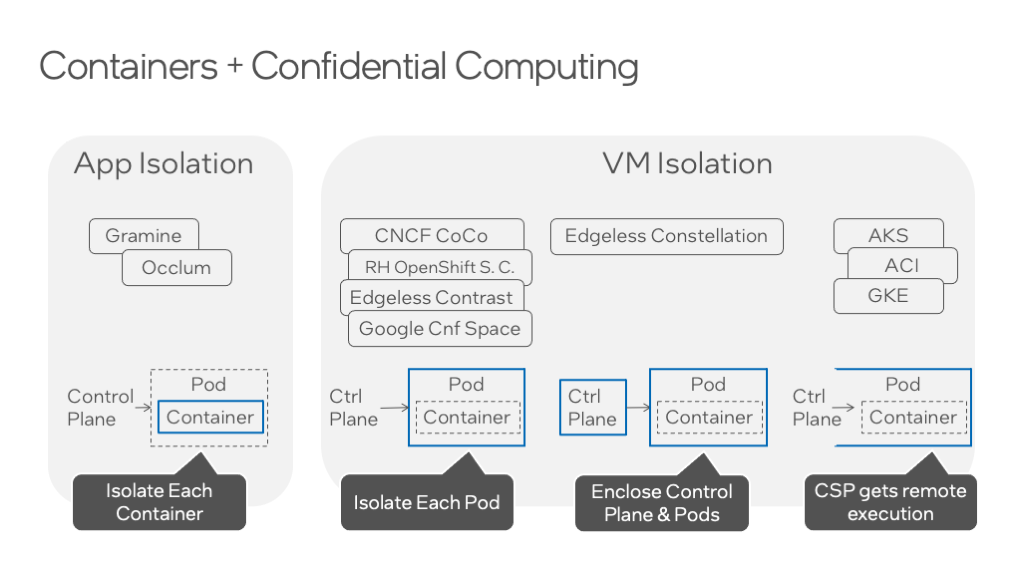

Process and VM Isolation examples with associated open source and commercial projects.

Let’s make this a little bit more concrete with some example open-source projects and commercial offerings. The only way to actually isolate a container, which means isolating a process, is with Intel® Software Guard Extensions (Intel® SGX) using an open-source project like Gramine or Occlum. So, if we come back to the question, “How much work do I have to do here?” there is at least a little bit of work because you’ll use these frameworks to repackage your application. You don’t have to rewrite your application, you don’t have to change its APIs, but you do need to use one of these projects to wrap your application in an enclave. This arguably gives you the most stringent protection because here you are only trusting your own application code and the Gramine or Occlum projects.

To the right, your next choice could be to isolate by pod. In practice, this means isolating at the granularity of a virtual machine (VM). An open-source project like CNCF Confidential Containers (CoCo) lets you take your existing containers and use the orchestration system to target Confidential Computing hardware. CoCo can also target Intel® SGX hardware using Occlum, but more commonly CoCo is used with VM isolation capabilities through Intel® Trust Domain Extensions (Intel® TDX), AMD Secure Encrypted Virtualization (SEV)*, Arm Confidential Computing Architecture (CCA)*, or eventually RISC-V CoVE*. And there’s a little bit of work here too. You don’t have to repackage your application, but you do need to use this enlightened orchestration system from CoCo (or a distribution like Red Hat OpenShift Sandbox Containers*). These systems will launch each pod in a separate confidential virtual machine (CVM). They have taken pains to limit what the control plane can do and inspect, and there is a good barrier between the CVM and the control plane. However, it is a balancing act to limit the capabilities of the control plane when those capabilities are largely why you are using orchestration to begin with.
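As a rough illustration of "you don’t have to repackage your application," the sketch below builds an ordinary Kubernetes Pod manifest and selects a confidential runtime through the standard `runtimeClassName` field of the Pod spec. The function name, the image reference, and the runtime class value `kata-qemu-tdx` are placeholders for this example; the runtime classes actually available depend on how the cluster was set up, so check your installation before relying on any particular name.

```python
import json


def confidential_pod_manifest(name: str, image: str, runtime_class: str) -> dict:
    """Build a minimal Pod manifest that requests a specific runtime class."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Assumption: the cluster defines a RuntimeClass with this name
            # that launches the pod inside a confidential VM.
            "runtimeClassName": runtime_class,
            # The container entry is unchanged from a non-confidential deployment.
            "containers": [{"name": name, "image": image}],
        },
    }


if __name__ == "__main__":
    manifest = confidential_pod_manifest(
        name="demo",
        image="registry.example.com/demo:1.0",  # placeholder image reference
        runtime_class="kata-qemu-tdx",          # placeholder runtime class name
    )
    print(json.dumps(manifest, indent=2))
```

The container image and entry point are untouched; only the runtime selection changes, which is why the effort here falls on the orchestration layer rather than on the application.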

Edgeless Systems Constellation* strikes a slightly different balance. If you don’t want to trust the control plane but you still want to use CSP infrastructure or some other untrusted data center, Constellation will run each control plane service in a confidential VM and then also launch your pods in confidential VMs. But operating K8s isn’t for everyone, so how much work is involved depends on whether you already operate K8s. If you don’t, this would be a significant increase in effort. There are no changes that you need to make to your applications, though, and if your company is already in the business of operating its own orchestration systems, then there’s arguably no added cost or effort here.

But for those organizations who do rely on managed services from CSPs, you can make use of confidential instances in popular CSPs such as Azure Kubernetes Service (AKS)* and Google Kubernetes Engine (GKE)*. And this is generally very simple, like checkbox simple, but it comes with a caveat that you do trust the CSP’s control of your control plane. Google makes this explicit in some very nice documentation: (https://cloud.google.com/kubernetes-engine/docs/concepts/control-plane-security).

Now, which one of these four is right for your organization depends on the things that we’ve just covered, but also a few other considerations. The user that chooses container isolation generally is one that has a security-sensitive workload where a compromise of the workload has real consequences. They might also have a multiparty workload where management of that workload by any one of those parties works against the common interests of that group.

Typically, those with security sensitive or multiparty workloads will isolate at process granularity. VM isolation can be implemented differently based on whether the control plane is trusted or not.

Users of CNCF Confidential Containers probably don’t fully trust the CSP, or they want defense in depth against the data center operator, whether that’s a CSP or their own enterprise on-prem data center. More importantly, they probably want to deploy sensitive information or a cryptographic secret only if they can first assess the security state of the system. That assessment is called Remote Attestation. Attestation is a fun topic and one of the most exciting parts of Confidential Computing, but it can be an article unto itself. So, to keep things brief, we’ll just stick with the idea that you can make an automated runtime decision about whether a system is trustworthy before deploying something sensitive.
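The "automated runtime decision" can be sketched as a gate in front of a secret. This is a deliberately simplified illustration, not how any particular attestation service works: the `Evidence` and `Policy` types and their fields are hypothetical, and the sketch assumes the evidence has already been cryptographically verified against the hardware vendor’s signing chain, which is the hard part and is omitted here.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class Evidence:
    """Hypothetical, already-verified claims about the remote environment."""
    measurement: str     # hex digest describing what was launched
    debug_enabled: bool  # whether the environment allows debugging


@dataclass(frozen=True)
class Policy:
    """Hypothetical policy held by the party that owns the secret."""
    allowed_measurements: FrozenSet[str]
    allow_debug: bool = False


def is_trustworthy(evidence: Evidence, policy: Policy) -> bool:
    """Decide whether the reported state meets the policy."""
    if evidence.debug_enabled and not policy.allow_debug:
        return False
    return evidence.measurement in policy.allowed_measurements


def release_secret(evidence: Evidence, policy: Policy, secret: bytes) -> Optional[bytes]:
    """Hand over the secret only if the environment passes the policy check."""
    return secret if is_trustworthy(evidence, policy) else None


if __name__ == "__main__":
    policy = Policy(allowed_measurements=frozenset({"ab12cd34"}))
    good = Evidence(measurement="ab12cd34", debug_enabled=False)
    bad = Evidence(measurement="ffffffff", debug_enabled=False)
    print(release_secret(good, policy, b"database-key") is not None)
    print(release_secret(bad, policy, b"database-key") is not None)
```

The point of the shape is that the secret never leaves its owner until the check passes, so an environment that fails attestation simply never receives anything sensitive.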

Now let’s look at the last two personas on the right of the diagram. Users of Constellation may not trust a CSP, or they may use a multi-cloud hosting strategy where it’s more advantageous for them to operate the K8s control plane themselves anyway. Users of CSP-managed K8s do not consider the CSP itself a risk, but they certainly want defense-in-depth protections against other tenants using that same shared infrastructure. In these latter two cases, Remote Attestation may also be desired, but used in more passive ways. For example, from an auditing perspective, logging the attestation can demonstrate compliance by showing that an application was run with protection of data in use.

In this article, we’ve covered more than a few considerations, but certainly, each of these four patterns has more to be understood to make an informed choice when it comes to security and operational considerations. I hope that this arms you, though, with the next set of questions to go pursue that informed choice.

[Edit 3/7: Clarified control plane influence per feedback from Benny Fuhry.]

Legal Disclaimers

Intel technologies may require enabled hardware, software or service activation.

No product or component can be absolutely secure.

Your costs and results may vary.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.
